TL;DR: AI creates engines for relentless optimization at all levels. Read on to find out how, and what that means for what you’re doing.

Some time ago I wrote about how [everything is a model](https://deliprao.com/archives/262) when reviewing a paper from Kraska et al. (2017), which showed that traditional CS data structures like B-Tree indexes, hashmaps, and Bloom Filters can be “learned” with a model, and that these model-driven indexes often outperform carefully constructed algorithmic indexes. I was so captivated by that paper that I called it a “wake-up call to the industry”, and noted:

> Anywhere we are making a decision, like using a specific heuristic or setting the value of a constant, there is a role for learned models.

In other words, machine learning will permeate everything we know. Since then:

1. DeepMind reported using machine learning to cut the energy used to cool Google’s data centers by up to 40%. Implication: Google can keep building increasingly complex data centers and maintain its computational supremacy.

2. Google reported using machine learning to optimize the placement of massive neural networks on multiple GPUs/TPUs for efficient training. Implication: Google can use this to train large-scale neural networks that their competition cannot. Many of these large-scale networks will enable capabilities that are unique to Google.

3. Google showed how deep learning could be used to learn memory access patterns. Implication: Google can use this to build “smart” caches everywhere in its stack to address the von Neumann bottleneck of memory performance better than ever before.

4. Google reported GAP, a deep learning-based approximate graph partitioning framework that outperforms baseline methods by a factor of 100. Implication: Google deals with large-scale graphs (the web graph, social networks, street graphs for maps, etc.), and graph partitioning is the first step before running algorithms like PageRank, recommenders, and direction routing. Each partition of the graph can then be processed independently using distributed algorithms. Other applications could include placing neural networks on multiple TPUs and efficiently placing transistors on chips in TPU design, to name just a few. Faster graph partitioning means Google can tackle large-scale problems (i.e., large graphs) with an agility its competitors cannot match.

5. Earlier this month, Martin Maas, David Andersen, Michael Isard, Mohammad Javanmard, Kathryn McKinley, and Colin Raffel outlined a novel Learned Lifetime-Aware Memory Allocator that reduces memory fragmentation by up to 78% in production server workloads. The paper is interesting, but it follows a pattern similar to that of the Kraska et al. (2017) paper: record a trace of a system’s execution, use that trace to train a supervised model, and fall back on conventional algorithmic solutions to handle exceptions. (Aside: Maas et al. don’t cite the clearly related Kraska et al. paper.) Implication: Google servers can now handle similar loads with fewer resources, improving availability across all Google products and services.
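The shared recipe in these papers (a model trained on recorded data, with a conventional algorithm catching its mistakes) can be illustrated with a toy version of the Kraska et al. learned index. This is a minimal sketch under simplifying assumptions, not code from either paper: it assumes sorted numeric keys, swaps the paper’s hierarchy of models for a single least-squares line, and the class name `LinearLearnedIndex` is hypothetical. The sorted keys play the role of the trace, the line predicts each key’s position, and a binary search limited to the model’s worst-case error window handles whatever the model gets wrong.

```python
import bisect


class LinearLearnedIndex:
    """Hypothetical toy learned index over sorted numeric keys.

    Assumption: one least-squares line maps key -> position (the paper
    uses a hierarchy of models). The largest error measured at build
    time bounds a fallback binary search, so lookups stay correct.
    """

    def __init__(self, keys):
        self.keys = sorted(keys)
        n = len(self.keys)
        if n == 0:
            raise ValueError("need at least one key")
        # Fit position ~ slope * key + intercept by ordinary least squares.
        mean_k = sum(self.keys) / n
        mean_p = (n - 1) / 2
        var_k = sum((k - mean_k) ** 2 for k in self.keys)
        cov = sum((k - mean_k) * (p - mean_p) for p, k in enumerate(self.keys))
        self.slope = cov / var_k if var_k else 0.0
        self.intercept = mean_p - self.slope * mean_k
        # Measure the model's worst-case position error on the stored keys.
        self.max_err = max(
            abs(self._predict(k) - p) for p, k in enumerate(self.keys)
        )

    def _predict(self, key):
        # Model step: guess a position, clamped to a valid index.
        guess = round(self.slope * key + self.intercept)
        return min(max(guess, 0), len(self.keys) - 1)

    def lookup(self, key):
        """Return the (first) position of key, or None if it is absent."""
        pos = self._predict(key)
        lo = max(pos - self.max_err, 0)
        hi = min(pos + self.max_err + 1, len(self.keys))
        # Fallback step: conventional binary search inside the error window.
        i = bisect.bisect_left(self.keys, key, lo, hi)
        if i < len(self.keys) and self.keys[i] == key:
            return i
        return None
```

For evenly spaced keys the line is nearly exact, so `LinearLearnedIndex(list(range(0, 1000, 3))).lookup(300)` returns `100` after searching a tiny window. Because the window is sized from the model’s measured worst-case error on the stored keys, lookups stay correct however poor the fit is; a better model only shrinks the range the fallback search has to cover.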

In his timeless book “Good to Great”, Jim Collins conjures the image of a person pushing a flywheel.

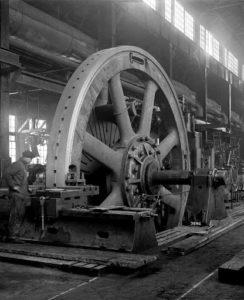

> Picture a huge, heavy flywheel—a massive metal disk mounted horizontally on an axle, about 30 feet in diameter, 2 feet thick, and weighing about 5,000 pounds. Now imagine that your task is to get the flywheel rotating on the axle as fast and long as possible. Pushing with great effort, you get the flywheel to inch forward, moving almost imperceptibly at first. You keep pushing and, after two or three hours of persistent effort, you get the flywheel to complete one entire turn. You keep pushing, and the flywheel begins to move a bit faster, and with continued great effort, you move it around a second rotation. You keep pushing in a consistent direction. Three turns … four … five … six … the flywheel builds up speed … seven … eight … you keep pushing … nine … ten … it builds momentum … eleven … twelve … moving faster with each turn … twenty … thirty … fifty … a hundred.
>
> Then, at some point—breakthrough! The momentum of the thing kicks in in your favor, hurling the flywheel forward, turn after turn … whoosh! … its own heavy weight working for you. You’re pushing no harder than during the first rotation, but the flywheel goes faster and faster. Each turn of the flywheel builds upon work done earlier, compounding your investment of effort. A thousand times faster, then ten thousand, then a hundred thousand. The huge heavy disk flies forward, with almost unstoppable momentum.

The papers listed in this post illustrate how AI is working its way into everything at companies like Google (and possibly Facebook and Amazon). Each work is like a small push on a giant flywheel. As with Collins’s flywheel, each push seems imperceptible on its own, but together they build a momentum the world hasn’t seen before. These AI Flywheels will be unstoppable and will produce new versions of these monopolies that dwarf their present selves, benefiting their shareholders and end-users with faster, cheaper, better products.